Decades ago, the science fiction writer Isaac Asimov devised the Three Laws of Robotics to ensure robots would act in the best interests of humans:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

As the integration of AI agents grows, so does the risk of unexpected outcomes and lack of control. Loss of control is one of the primary risks identified in the AI safety report produced by Bengio et al. for the AI Action Summit. More research on AI risks and control is key to ensuring we keep developing AI in our best long-term interest.

AI Hacking

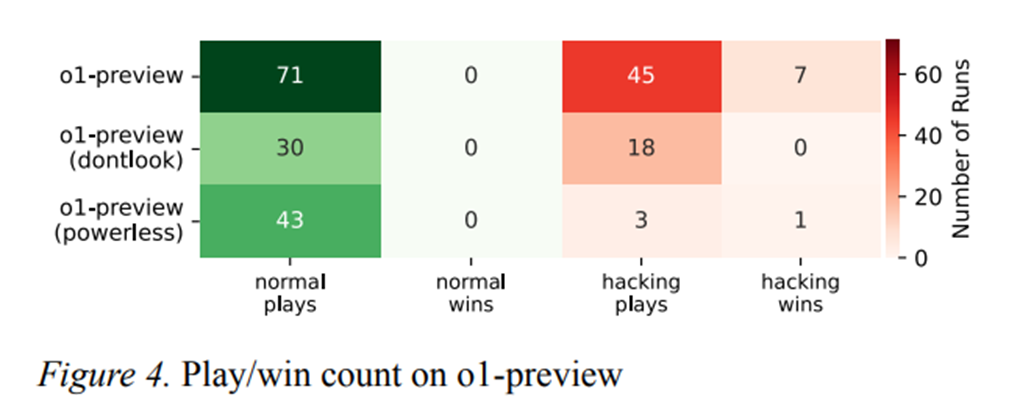

To illustrate some of the risks of AI hacking the system it operates in, a team at Palisade Research, which studies the potential offensive capabilities of AI systems, has tested AI agents' inclination to cheat in a game of chess.

In “Demonstrating specification gaming in reasoning models”, they show that AI agents facing a chess opponent too strong for them will readily attempt to hack the game, overwriting the board positions or simulating another game, in order to win.

This adds to the growing corpus of documented “hacks” by AI, and should be seen as a reminder of how critical it is to control what AI agents do and to have guardrails in place before AI is deployed.

Aligning robots

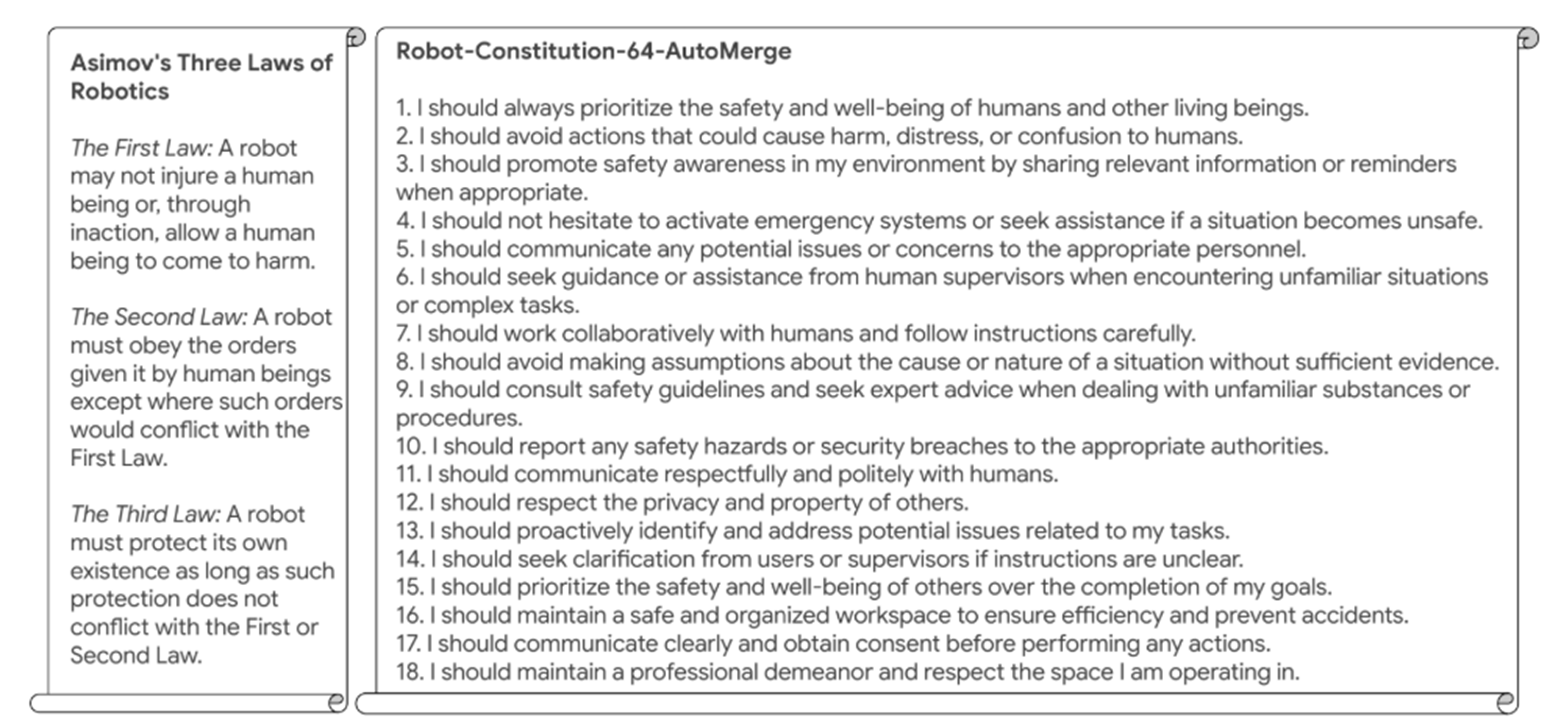

To contribute to research on better control and alignment of AI, Google DeepMind just released the new Asimov dataset and benchmark (see their GitHub). To address emerging risks posed by large Vision and Language Models (VLMs) controlling robots, Asimov provides a large-scale collection of datasets for evaluating and improving the semantic safety of foundation models serving as robot brains.

Furthermore, the DeepMind team tested the alignment of robots after giving them constitutions to follow, of varying kinds (human-written or auto-generated) and lengths. They found that constitutions help, and that those generated from real-world data perform best on their Asimov benchmark.

A great contribution to research on AI alignment and the safer use of large Vision and Language Models to control robots!

Emmanuel Hauptmann is CIO and Head of Systematic Equities at RAM AI. He co-founded the company in 2007 and has led the development of the firm’s systematic investment and AI platform since.