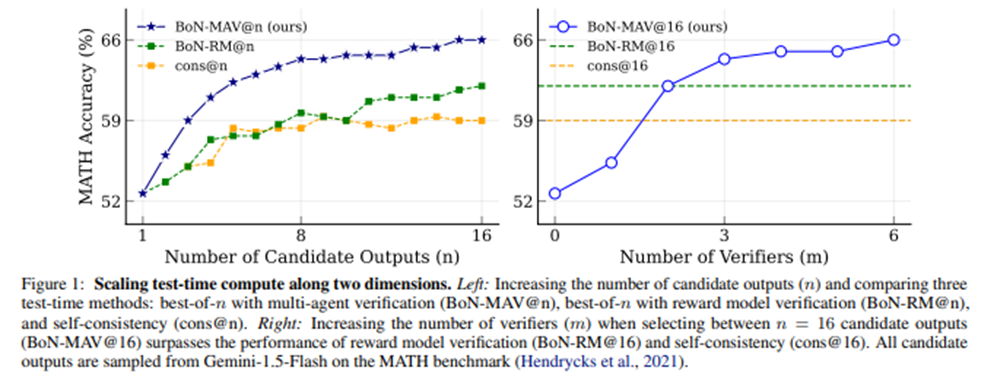

Large Language Models (LLMs) can be improved by using more time and compute before coming up with a better answer (cf. The key to Intelligence: “Wait…” – AIF4T). One strategy to improve LLM outputs is to use verifiers which evaluate candidate outputs to return the best ones. This paper looks at an interesting new approach, which seeks to increase the number of verifiers. These can potentially based on different language models, eg. with GPT-4o-mini or Gemini-1.5-Flash for instance, having different aspects of the output to verify (eg. mathematical correctness, logical soundness, factuality,…) or a different verification strategy (direct validation of output, rephrasing, step-by-step check, edge cases…).

The method leads to superior results than single agent verifiers and is inspiring in the way it uses variations of LLMS to come up with better outcomes. Check it out: [2502.20379] Multi-Agent Verification: Scaling Test-Time Compute with Multiple Verifiers.

Emmanuel Hauptmann is CIO and Head of Systematic Equities at RAM AI. He co-founded the company in 2007 and has led the development of the firm’s systematic investment and AI platform since.