Alibaba announced today the release of QwQ-32B, a compact reasoning model that delivers remarkable performance for its size.

The Alibaba team built the model on Qwen2.5-32B, a foundation model that was already topping the leaderboards among open-source models of its size. Probably inspired by DeepSeek's success, the team explored how far reinforcement learning (RL) can be scaled to improve the model's reasoning ability and turn it into a strong reasoning model.

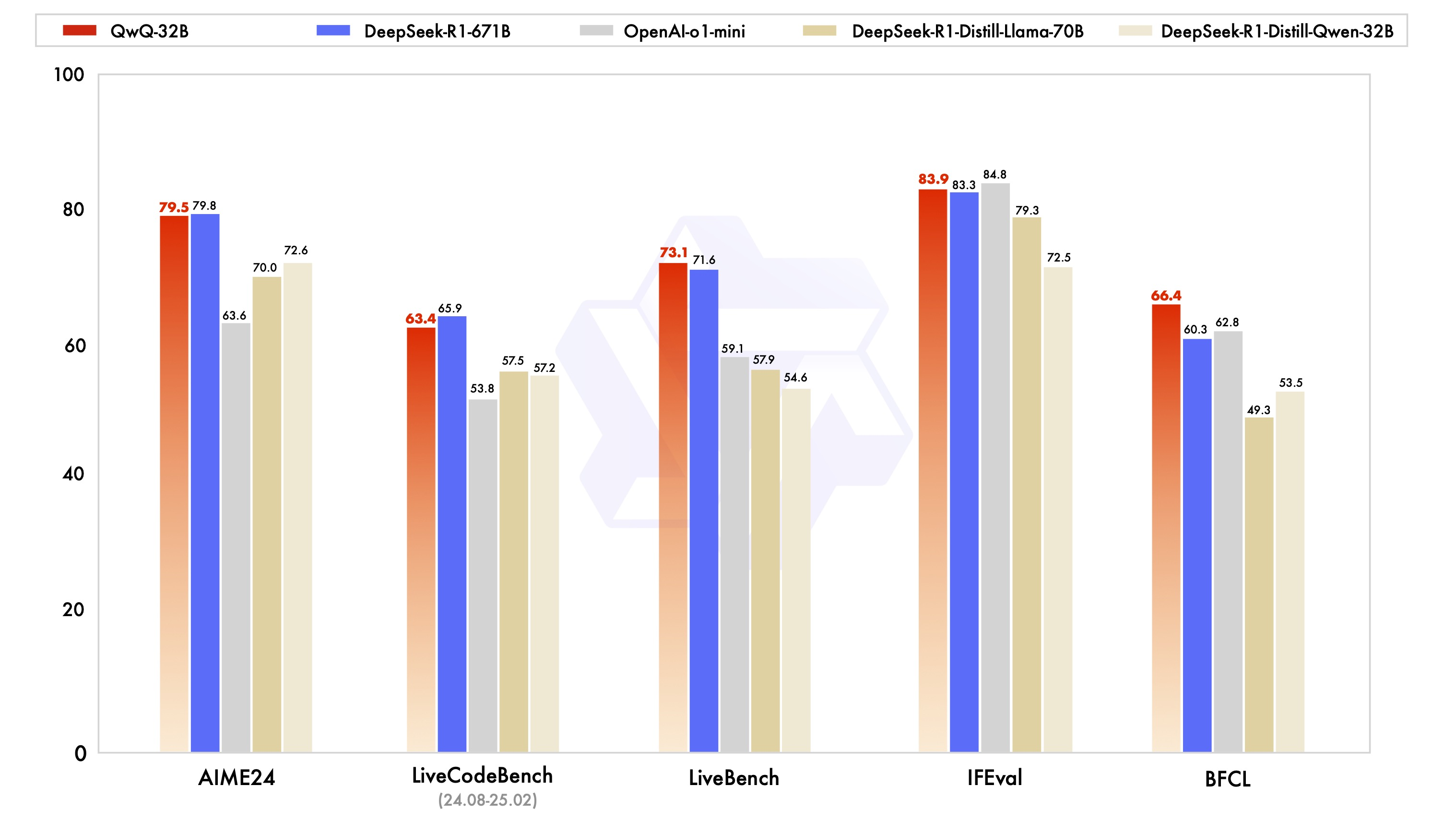

As a reasoning model, QwQ-32B achieves performance comparable to DeepSeek-R1 despite having roughly one-twentieth of its total parameters (about 32B versus 671B). It also has slightly fewer active parameters: QwQ-32B is a dense model, so all of its roughly 32B parameters are active, whereas DeepSeek-R1's mixture-of-experts design activates about 37B per token. It posts strong results across coding, maths and other tasks (see below). These results demonstrate the potential of RL to produce more powerful and efficient models, and they push the efficiency frontier for open-source models!

You can already access QwQ-32B on Hugging Face.

Emmanuel Hauptmann is CIO and Head of Systematic Equities at RAM AI. He co-founded the company in 2007 and has led the development of the firm’s systematic investment and AI platform since.