Today, Large Language Models (LLMs) can efficiently represent human text and symbolic (mathematical and programming) languages.

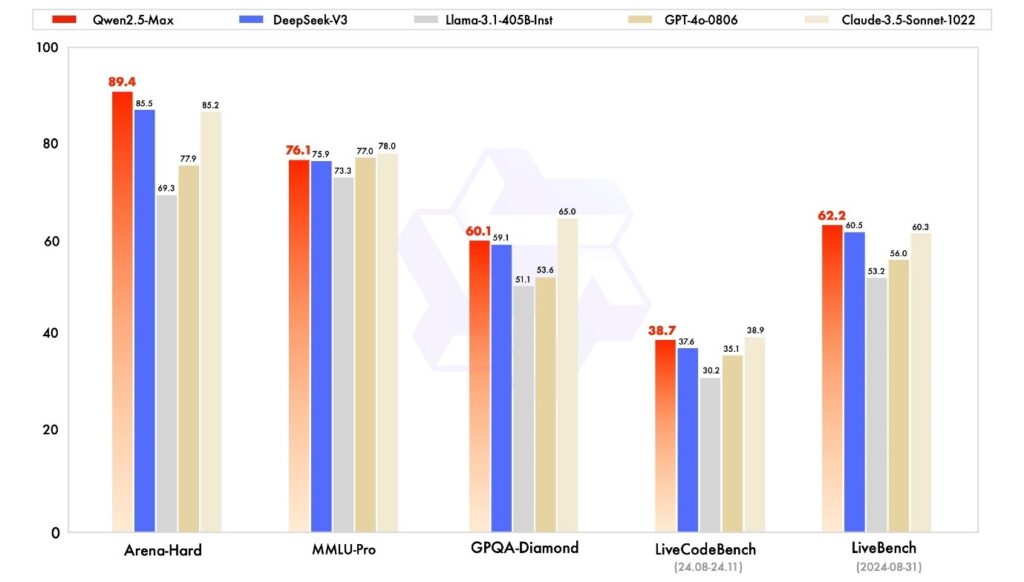

In January, DeepSeek put the spotlight on Chinese AI, which has consistently delivered some of the best LLMs in the last few years. The Qwen team, in charge of Alibaba’s LLM lab, has been at the forefront of Chinese AI. They announced their latest model, Qwen2.5-Max, on January 28th and reported results on the following benchmarks: MMLU-Pro, which tests knowledge through college-level problems; LiveCodeBench, which assesses coding capabilities; LiveBench, which comprehensively tests general capabilities; and Arena-Hard, which approximates human preferences.

Reasoning Models

Globally, AI labs are pushing beyond text representation and basic text generation by LLMs, striving to develop models that emulate human-like thinking processes. Their focus is on reasoning models, designed to enable more advanced reasoning, planning and decision-making. The goal is to create AI capable of handling increasingly complex tasks, such as generating extensive codebases, building entire websites, and producing deeper, more nuanced content.

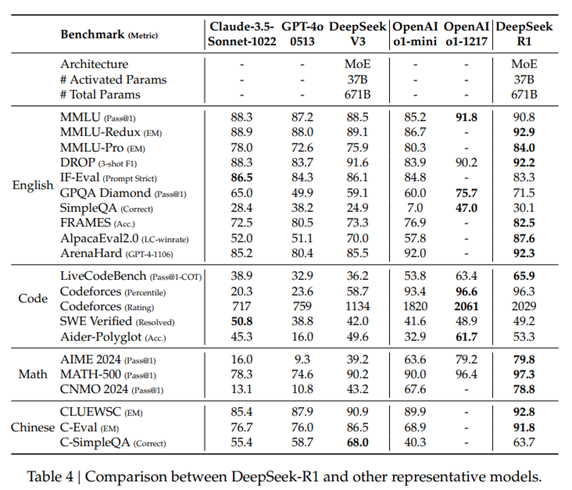

DeepSeek-R1 MoE

Source: DeepSeek, "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning" (arXiv:2501.12948)

Open-Source Models

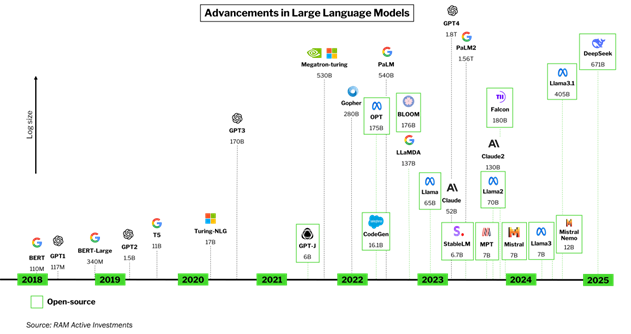

For years, smaller open-source models have closely followed state-of-the-art models, achieving comparable performance with just a fraction of the parameters and computational requirements. The era of exponential model scaling appears to be coming to an end, as more efficient learning techniques replace brute-force scaling.

Recently (as detailed below), the open-source community has focused on developing smaller models that maintain accuracy close to that of their much larger counterparts, yet are 100 times smaller and far more economical to train and deploy.

Key players in this space include Meta (Llama models), Google (Gemma), Alibaba (Qwen), Mistral (Mixtral), and now DeepSeek, which, along with R1, has introduced distilled versions of its models in various sizes, similar to how Llama and Qwen models have been scaled down.

Emmanuel Hauptmann is CIO and Head of Systematic Equities at RAM AI. He co-founded the company in 2007 and has led the development of the firm’s systematic investment and AI platform since.